Transformer

| Created | |
|---|---|
| Tags | NN |

The Transformer is the first sequence transduction model based entirely on attention, replacing recurrence with multi-headed self-attention layers.

The Transformer model represents a significant shift in the approach to sequence-to-sequence tasks in machine learning, particularly in natural language processing (NLP). Introduced by Vaswani et al. in the 2017 paper "Attention Is All You Need", the Transformer set new standards for tasks such as machine translation and text summarization. More recently, it has become the foundation of large language models such as GPT (Generative Pre-trained Transformer) and BERT (Bidirectional Encoder Representations from Transformers).

Key Features of the Transformer

- Self-Attention Mechanism: At the core of the Transformer architecture is the self-attention mechanism, which allows the model to weigh the importance of different words within the input data. Unlike previous models that processed data sequentially (like RNNs and LSTMs), the Transformer processes all words or tokens in parallel. This dramatically improves efficiency and allows the model to capture complex dependencies in the data.

- Positional Encoding: Since the Transformer does not inherently process data in sequence, it uses positional encodings to give the model information about the order of words in the sentence. This information is added to the input embeddings before processing by the model.

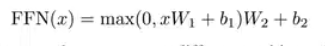

- Layered Structure: The Transformer model consists of an encoder and a decoder, each made up of multiple layers. Each layer in both the encoder and decoder contains a self-attention mechanism and a feed-forward neural network. In the encoder, the self-attention mechanism helps the model look at other words in the input sentence as it encodes a specific word. The decoder additionally attends to the encoder's output, so it can focus on different parts of the input sentence as well as on the output it has generated so far (through masked self-attention).

- No Recurrence: Unlike its predecessors, the Transformer does not use recurrent layers. This absence of recurrence enables much more parallelization during training and significantly speeds up the training process.
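The positional-encoding bullet above can be made concrete with a minimal sketch. It assumes the fixed sinusoidal scheme of the original paper, PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)); the function name `sinusoidal_positional_encoding` and the tensor sizes are illustrative choices, not part of these notes.

```python
import math

import torch


def sinusoidal_positional_encoding(max_len: int, d_model: int) -> torch.Tensor:
    """Return a (max_len, d_model) table of fixed sinusoidal position encodings.

    Assumes d_model is even: even dimensions use sin, odd dimensions use cos.
    """
    position = torch.arange(max_len, dtype=torch.float32).unsqueeze(1)  # (max_len, 1)
    # 1 / 10000^(2i / d_model) for i = 0 .. d_model/2 - 1
    div_term = torch.exp(
        torch.arange(0, d_model, 2, dtype=torch.float32) * (-math.log(10000.0) / d_model)
    )
    pe = torch.zeros(max_len, d_model)
    pe[:, 0::2] = torch.sin(position * div_term)
    pe[:, 1::2] = torch.cos(position * div_term)
    return pe


# Usage: add the encodings to (batch, seq_len, d_model) token embeddings
batch, seq_len, d_model = 2, 10, 512
embeddings = torch.randn(batch, seq_len, d_model)
pe = sinusoidal_positional_encoding(seq_len, d_model)
x = embeddings + pe  # pe broadcasts over the batch dimension
print(x.shape)  # torch.Size([2, 10, 512])
```

Because the table has no learned parameters, it can be computed once for the longest expected sequence and sliced as needed.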

Applications

The Transformer model has been highly successful in a wide range of NLP tasks, including but not limited to:

- Machine Translation: Translating text from one language to another.

- Text Summarization: Creating concise summaries of longer texts.

- Named Entity Recognition (NER): Identifying and classifying key elements in text into predefined categories.

- Question Answering: Building models that can answer questions based on a given context.

- Sentiment Analysis: Determining the sentiment expressed in a piece of text.

Impact

The introduction of the Transformer model has had a profound impact on the field of NLP, leading to the development of models that significantly outperform previous state-of-the-art models on a variety of tasks. Its architecture is the foundation of many subsequent models that have pushed the boundaries of what's possible in machine learning and artificial intelligence.

The Transformer's efficiency, scalability, and effectiveness have made it a cornerstone of modern NLP research and applications, influencing the direction of future developments in the field.

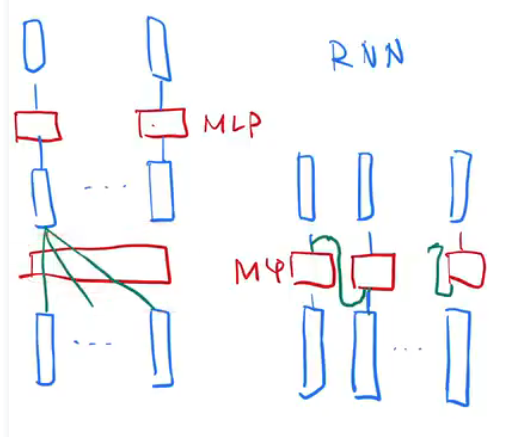

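Comparison with RNNs

RNN: relies on a hidden state h_t computed sequentially from the inputs, so it cannot be parallelized across time steps. This is computationally costly, and early information in h_t is lost when the inputs are too long.

Attention: previously used mainly to connect the encoder to the decoder.

CNN: each convolution only sees a local window, so the whole sequence becomes visible only after many stacked layers.

Multi-head: mimics the multiple output channels of a convolutional network to produce multiple outputs.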

Model architecture:

Encoder:

Two hyperparameters: N = 6 layers and output dimension d_model = 512. Each layer has 2 sublayers, each wrapped with a residual connection and layer normalization.

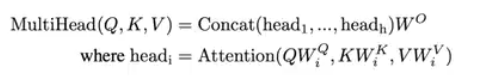

Sublayers: multi-head self-attention and a position-wise feed-forward network.
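A minimal sketch of this encoder stack, assuming PyTorch's built-in `nn.TransformerEncoderLayer` and `nn.TransformerEncoder` (a library implementation, not the paper's original code). The head count (8) and feed-forward width (2048) come from the notes further down; the batch and sequence sizes are arbitrary.

```python
import torch
import torch.nn as nn

d_model, n_heads, d_ff, n_layers = 512, 8, 2048, 6

# One encoder layer = multi-head self-attention + position-wise FFN,
# each followed by a residual add and LayerNorm (norm_first=False, the default, is post-LN)
encoder_layer = nn.TransformerEncoderLayer(
    d_model=d_model,
    nhead=n_heads,
    dim_feedforward=d_ff,
    dropout=0.1,
    activation="relu",
    batch_first=True,
)
# Stack N = 6 identical layers
encoder = nn.TransformerEncoder(encoder_layer, num_layers=n_layers)

src = torch.randn(2, 10, d_model)  # (batch, src_len, d_model)
memory = encoder(src)
print(memory.shape)  # torch.Size([2, 10, 512])
```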

Decoder:

Two hyperparameters: N = 6 layers and output dimension d_model = 512. Each layer has 3 sublayers, each wrapped with a residual connection and layer normalization.

Sublayers: masked multi-head self-attention, multi-head attention over the encoder output, and a position-wise feed-forward network.
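Similarly, a sketch of the decoder stack using PyTorch's `nn.TransformerDecoderLayer` and `nn.TransformerDecoder`. The causal mask is built by hand with `torch.triu`; `memory` is random data standing in for a real encoder output, and all sizes are illustrative assumptions.

```python
import torch
import torch.nn as nn

d_model, n_heads, d_ff, n_layers = 512, 8, 2048, 6

# One decoder layer = masked self-attention + encoder-decoder attention + FFN,
# each with a residual add and LayerNorm
decoder_layer = nn.TransformerDecoderLayer(
    d_model=d_model, nhead=n_heads, dim_feedforward=d_ff, dropout=0.1, batch_first=True
)
decoder = nn.TransformerDecoder(decoder_layer, num_layers=n_layers)

batch, src_len, tgt_len = 2, 10, 7
memory = torch.randn(batch, src_len, d_model)  # stands in for the encoder output
tgt = torch.randn(batch, tgt_len, d_model)  # stands in for shifted-right target embeddings

# Causal mask: -inf above the diagonal, so position t cannot attend to positions > t
tgt_mask = torch.triu(torch.full((tgt_len, tgt_len), float("-inf")), diagonal=1)

out = decoder(tgt, memory, tgt_mask=tgt_mask)
print(out.shape)  # torch.Size([2, 7, 512])
```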

Scaled Dot-Product Attention

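The scores are divided by √d_k before the softmax: Attention(Q, K, V) = softmax(QKᵀ / √d_k) V. This keeps the softmax from saturating and its gradients from vanishing.

Mask: for the query at position t, the scores of all positions after t are set to a very large negative number (e.g., -1e10), so the softmax gives them a weight of 0. Multi-head: h heads, each with its own projection matrices W^Q, W^K, W^V.

h = 8, d_k = d_v = d_model / h = 64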
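The formula and masking rule above can be written out directly. This is a from-scratch sketch: the function name `attention`, the mask convention (True = may attend), and the -1e10 fill value are assumptions chosen to match the note, not a library API.

```python
import math

import torch


def attention(q, k, v, mask=None):
    """softmax(Q K^T / sqrt(d_k)) V.

    q: (..., T_q, d_k), k: (..., T_k, d_k), v: (..., T_k, d_v).
    mask: optional bool tensor broadcastable to (..., T_q, T_k); False marks blocked positions.
    """
    d_k = q.size(-1)
    scores = q @ k.transpose(-2, -1) / math.sqrt(d_k)
    if mask is not None:
        # A very large negative score becomes ~0 after the softmax
        scores = scores.masked_fill(~mask, -1e10)
    weights = torch.softmax(scores, dim=-1)
    return weights @ v, weights


# Multi-head sizes from the paper: h = 8, d_k = d_v = d_model / h
d_model, h = 512, 8
d_k = d_model // h  # 64

T = 5
q = torch.randn(2, h, T, d_k)  # (batch, heads, seq_len, d_k)
k = torch.randn(2, h, T, d_k)
v = torch.randn(2, h, T, d_k)
causal = torch.tril(torch.ones(T, T, dtype=torch.bool))  # True where key j <= query t

out, weights = attention(q, k, v, mask=causal)
print(out.shape)  # torch.Size([2, 8, 5, 64])
```

Every head runs the same computation on its own 64-dimensional slice, which is why the leading `(batch, heads)` dimensions can simply be carried along by the batched matrix multiplications.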

Position wise feed forward networks (MLP)

x (512) → Linear (2048) → ReLU → Linear → output (512), i.e. FFN(x) = max(0, xW1 + b1)W2 + b2, applied to each position separately and identically.
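A sketch of this position-wise FFN with the paper's sizes (d_model = 512, inner size 2048). It also checks the "position-wise" property: running a single position through the network gives the same result as taking that position from the full-sequence output. The dummy input sizes are arbitrary.

```python
import torch
import torch.nn as nn

d_model, d_ff = 512, 2048

# FFN(x) = max(0, x W1 + b1) W2 + b2, applied to every position independently
ffn = nn.Sequential(
    nn.Linear(d_model, d_ff),
    nn.ReLU(),
    nn.Linear(d_ff, d_model),
)

x = torch.randn(2, 10, d_model)  # (batch, seq_len, d_model)
y = ffn(x)  # nn.Linear acts on the last dimension, so positions never mix
print(y.shape)  # torch.Size([2, 10, 512])

# Position-wise: running one position on its own gives the same result
y_pos3 = ffn(x[:, 3, :])
print(torch.allclose(y[:, 3, :], y_pos3, atol=1e-5))  # True
```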

Self Attention:

Summary:

- attention, positional encoding, multi-head attention, MLP

PyTorch Transformer Block Example

First, ensure you have PyTorch installed in your environment. You can install PyTorch by following the instructions on the official PyTorch website.

Here's a basic example of a Transformer block:

```python
import torch
import torch.nn as nn


class TransformerBlock(nn.Module):
    """Post-LN Transformer block: multi-head attention and a feed-forward network,
    each followed by dropout, a residual connection, and LayerNorm."""

    def __init__(self, embed_size, heads, dropout, forward_expansion):
        super().__init__()
        # batch_first=True because the inputs below are (batch, seq_len, embed_size)
        self.attention = nn.MultiheadAttention(
            embed_dim=embed_size, num_heads=heads, dropout=dropout, batch_first=True
        )
        self.norm1 = nn.LayerNorm(embed_size)
        self.norm2 = nn.LayerNorm(embed_size)
        # Position-wise feed-forward network: expand, apply ReLU, project back
        self.feed_forward = nn.Sequential(
            nn.Linear(embed_size, forward_expansion * embed_size),
            nn.ReLU(),
            nn.Linear(forward_expansion * embed_size, embed_size),
        )
        self.dropout = nn.Dropout(dropout)

    def forward(self, value, key, query, mask=None):
        # nn.MultiheadAttention takes (query, key, value) and returns (output, weights)
        attention, _ = self.attention(query, key, value, attn_mask=mask)
        # Dropout on the sublayer output, then residual connection and LayerNorm
        x = self.norm1(query + self.dropout(attention))
        ff_out = self.feed_forward(x)
        out = self.norm2(x + self.dropout(ff_out))
        return out


# Example usage
embed_size = 256  # Size of the embedding vector
heads = 8  # Number of attention heads
dropout = 0.1  # Dropout rate
forward_expansion = 4  # Expansion factor for the feed-forward network

# Create a Transformer block
transformer_block = TransformerBlock(embed_size, heads, dropout, forward_expansion)

# Dummy inputs for demonstration: (batch_size, sequence_length, embed_size)
value = torch.rand((5, 10, embed_size))
key = torch.rand((5, 10, embed_size))
query = torch.rand((5, 10, embed_size))
mask = None  # No mask, for simplicity

# Forward pass through the transformer block
out = transformer_block(value, key, query, mask)
print(out.shape)  # torch.Size([5, 10, 256]), same as the query shape
```

This example defines a TransformerBlock class, incorporating self-attention and feed-forward networks along with normalization and dropout for regularization. The forward method outlines how data flows through the block, taking value, key, query, and an optional mask as inputs and producing the transformed output.

Remember, this is a basic building block. A complete Transformer model for tasks like machine translation would require stacking multiple such blocks into an encoder and decoder architecture, along with embedding layers and a final linear layer for predictions.

For a full-fledged application, you'd also need to handle aspects like positional encoding, data preprocessing, training loop setup, and evaluation. These components are crucial for developing working models capable of tackling real-world NLP tasks.

Predicting future steps:

- Avoid using future steps: when predicting position t, the decoder must not see positions after t.

- Solution: masking.
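The effect of masking can be checked empirically. This sketch assumes PyTorch's `nn.MultiheadAttention`, where a boolean `attn_mask` entry of True means the position may not be attended; changing only the "future" tokens leaves the outputs at earlier positions unchanged. Sizes and random inputs are arbitrary.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
T, d_model = 6, 16
attn = nn.MultiheadAttention(embed_dim=d_model, num_heads=4, batch_first=True)

# Boolean attn_mask: True means "not allowed to attend",
# so block everything strictly above the diagonal (the future)
future_mask = torch.triu(torch.ones(T, T, dtype=torch.bool), diagonal=1)

x = torch.randn(1, T, d_model)
x_changed = x.clone()
x_changed[:, 3:] = torch.randn(1, T - 3, d_model)  # alter only positions 3..5

out, _ = attn(x, x, x, attn_mask=future_mask)
out_changed, _ = attn(x_changed, x_changed, x_changed, attn_mask=future_mask)

# Outputs at positions 0..2 do not depend on the altered future positions
print(torch.allclose(out[:, :3], out_changed[:, :3], atol=1e-6))  # True
```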

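Questions:

1. Why does the Transformer use multi-head attention? (Why not a single head?)

Multiple heads introduce diversity into what is learned: each head can focus on different patterns, similar to how a CNN uses multiple filters (channels) to extract different features. This also improves robustness.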
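A sketch of how one d_model-wide projection is split into h heads, each producing its own attention map. Implementing the h per-head W^Q / W^K matrices as a single `nn.Linear` followed by a reshape is a common convention, assumed here rather than taken from these notes; `split_heads` is a hypothetical helper.

```python
import math

import torch
import torch.nn as nn

torch.manual_seed(0)
batch, T, d_model, h = 2, 5, 512, 8
d_k = d_model // h

x = torch.randn(batch, T, d_model)
w_q = nn.Linear(d_model, d_model)  # holds the h per-head W^Q matrices side by side
w_k = nn.Linear(d_model, d_model)


def split_heads(t):
    # (batch, T, d_model) -> (batch, h, T, d_k)
    return t.view(batch, T, h, d_k).transpose(1, 2)


q, k = split_heads(w_q(x)), split_heads(w_k(x))
weights = torch.softmax(q @ k.transpose(-2, -1) / math.sqrt(d_k), dim=-1)
print(weights.shape)  # torch.Size([2, 8, 5, 5]): one attention map per head

# Different heads generally produce different attention patterns
print(torch.allclose(weights[:, 0], weights[:, 1]))
```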

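2. Why does the Transformer generate Q and K with different weight matrices? Why can't the same value be dot-multiplied with itself? (Note how this differs from the first question.)

Without learned weights, there would be nothing to learn in the attention scores. With one shared matrix (Q = K), the score matrix would also be symmetric (the raw score of i for j equals that of j for i), and each token would tend to score highly with itself. Separate W^Q and W^K remove this restriction.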
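A small numerical check of the symmetry argument, using random matrices as stand-ins for learned W^Q and W^K (an assumption for illustration):

```python
import torch

torch.manual_seed(0)
T, d_model, d_k = 4, 8, 8
x = torch.randn(T, d_model)
w_q = torch.randn(d_model, d_k)
w_k = torch.randn(d_model, d_k)

# Shared projection (Q = K = x W): scores are symmetric, score(i, j) == score(j, i)
shared = (x @ w_q) @ (x @ w_q).T
print(torch.allclose(shared, shared.T))  # True

# Separate projections: score(i, j) and score(j, i) can differ
separate = (x @ w_q) @ (x @ w_k).T
print(torch.allclose(separate, separate.T))  # False
```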

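3. Why does the Transformer compute attention with a dot product rather than addition? How do the two differ in computational complexity and performance?

The two have similar theoretical complexity, but dot-product attention is much faster and more space-efficient in practice because it can be implemented with highly optimized matrix multiplication. For large d_k, additive attention outperforms unscaled dot-product attention, which is why the dot product is scaled.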
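The structural difference can be sketched in a few lines, assuming the additive form v^T tanh(W1 q + W2 k) (Bahdanau-style attention; the layer names `w1`, `w2`, `v` and all sizes are illustrative). Dot-product scores come from one matrix multiplication, while additive scores need an (n, m, d_att) intermediate tensor.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
n, m, d, d_att = 4, 6, 16, 16
q = torch.randn(n, d)  # queries
k = torch.randn(m, d)  # keys

# Dot-product scores: one matrix multiplication, (n, d) @ (d, m) -> (n, m)
dot_scores = q @ k.T

# Additive scores: v^T tanh(W1 q_i + W2 k_j)
w1 = nn.Linear(d, d_att, bias=False)
w2 = nn.Linear(d, d_att, bias=False)
v = nn.Linear(d_att, 1, bias=False)
# Broadcasting builds an (n, m, d_att) intermediate tensor before reducing to (n, m)
hidden = torch.tanh(w1(q).unsqueeze(1) + w2(k).unsqueeze(0))
add_scores = v(hidden).squeeze(-1)

print(dot_scores.shape, add_scores.shape)  # torch.Size([4, 6]) torch.Size([4, 6])
print(hidden.shape)  # torch.Size([4, 6, 16])
```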

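4. Why do the attention scores need to be scaled before the softmax (why divide by the square root of d_k)? Explain with a derivation.

q·k can become very large. When the inputs to the softmax are large, it assigns almost all of the weight (close to 1) to the largest value, and the gradients become very small. If the components of q and k have mean 0 and variance 1, then q·k has mean 0 and variance d_k, so dividing by √d_k brings the variance back to 1.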
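The variance argument can be written out as a short derivation, under the assumption (also made in the paper) that the components q_i and k_i (i = 1, ..., d_k) are independent random variables with mean 0 and variance 1:

```latex
\begin{aligned}
\mathbb{E}[q \cdot k] &= \sum_{i=1}^{d_k} \mathbb{E}[q_i]\,\mathbb{E}[k_i] = 0 \\
\operatorname{Var}(q_i k_i) &= \mathbb{E}[q_i^2]\,\mathbb{E}[k_i^2] - \left(\mathbb{E}[q_i]\,\mathbb{E}[k_i]\right)^2 = 1 \cdot 1 - 0 = 1 \\
\operatorname{Var}(q \cdot k) &= \sum_{i=1}^{d_k} \operatorname{Var}(q_i k_i) = d_k \\
\operatorname{Var}\!\left(\frac{q \cdot k}{\sqrt{d_k}}\right) &= \frac{d_k}{d_k} = 1
\end{aligned}
```

Without scaling, the standard deviation of the scores grows like √d_k, which pushes the softmax toward saturation as d_k increases.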

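5. How is padding masked when computing the attention scores?

Before the softmax, set the scores at padded key positions to a very large negative number, so that their attention weights become 0.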
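A sketch using PyTorch's `nn.MultiheadAttention`, whose `key_padding_mask` argument (True = padding) masks padded keys before the softmax. The batch, with one sequence padded from 3 to 5 tokens, is made up for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
batch, T, d_model = 2, 5, 16
attn = nn.MultiheadAttention(embed_dim=d_model, num_heads=4, batch_first=True)

x = torch.randn(batch, T, d_model)
# key_padding_mask: True marks padding tokens that must be ignored as keys.
# Sequence 0 has 3 real tokens, sequence 1 has all 5.
key_padding_mask = torch.tensor([
    [False, False, False, True, True],
    [False, False, False, False, False],
])

out, weights = attn(x, x, x, key_padding_mask=key_padding_mask)
# weights: (batch, T_query, T_key), averaged over heads
print(weights[0, :, 3:].abs().max().item())  # 0.0: no attention on padded keys
```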

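6. Why is the dimension of each head reduced in multi-head attention? (Refer to the previous question.)

The head outputs are concatenated and fed to the next (output projection) layer. With d_k = d_v = d_model / h, the concatenation has dimension d_model again, and the total computational cost is similar to that of single-head attention with full dimensionality.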
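One way to see that splitting d_model across heads keeps the cost unchanged: in PyTorch's `nn.MultiheadAttention`, the parameter count does not depend on `num_heads`, because the h heads of size d_model / h together use the same projection sizes as a single full-width head. `n_params` is a hypothetical helper.

```python
import torch.nn as nn

d_model = 512


def n_params(module: nn.Module) -> int:
    return sum(p.numel() for p in module.parameters())


# h heads of size d_model / h use the same Q, K, V and output projection
# sizes as one head of size d_model
single = nn.MultiheadAttention(embed_dim=d_model, num_heads=1)
multi = nn.MultiheadAttention(embed_dim=d_model, num_heads=8)

print(n_params(single), n_params(multi))  # 1050624 1050624
print(4 * d_model * d_model + 4 * d_model)  # 1050624: W^Q, W^K, W^V, W^O plus biases
```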

7. Briefly describe the Transformer's encoder module.

The encoder consists of 6 identical blocks, each with 2 sublayers: multi-head self-attention and a position-wise feed-forward network. Each sublayer is wrapped with a residual connection followed by layer normalization, i.e. LayerNorm(x + Sublayer(x)).

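8. Why are the input word embeddings multiplied by the square root of the embedding size? What is the purpose?

It keeps the embedding values from being too small relative to the positional encodings. The paper only states that the embedding weights are multiplied by √d_model; a common explanation is that the learned embeddings have small values (e.g., when initialized with variance about 1/d_model), and scaling brings them to a magnitude comparable to the positional encodings (whose values lie in [-1, 1]), so the positional signal does not drown out the token information.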
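A sketch of the scaling under an explicit assumption: the embedding table is initialized with standard deviation d_model^(-1/2), one common choice (PyTorch's own `nn.Embedding` default is N(0, 1), for which this scaling would make values large instead). `ScaledEmbedding` is a hypothetical module name.

```python
import math

import torch
import torch.nn as nn


class ScaledEmbedding(nn.Module):
    """Hypothetical helper: token embedding multiplied by sqrt(d_model)."""

    def __init__(self, vocab_size: int, d_model: int):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, d_model)
        # Assumption: small init with std d_model**-0.5 (about 0.044 for d_model = 512)
        nn.init.normal_(self.embed.weight, mean=0.0, std=d_model ** -0.5)
        self.scale = math.sqrt(d_model)

    def forward(self, tokens: torch.Tensor) -> torch.Tensor:
        return self.embed(tokens) * self.scale


torch.manual_seed(0)
d_model = 512
emb = ScaledEmbedding(vocab_size=1000, d_model=d_model)
tokens = torch.randint(0, 1000, (2, 10))
raw_std = emb.embed(tokens).std().item()  # roughly 0.044
scaled_std = emb(tokens).std().item()  # roughly 1.0, comparable to sin/cos values in [-1, 1]
print(raw_std, scaled_std)
```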

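9. Briefly introduce the Transformer's positional encoding. What is its purpose, and what are its advantages and disadvantages?

It adds sine and cosine functions of different frequencies to the embeddings to inject word order information. The encoding is fixed (not learned), robust, and can be computed for all positions in parallel.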
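The paper motivates the sinusoidal choice by noting that, for any fixed offset k, PE(pos + k) is a linear function of PE(pos). A sketch that checks this numerically: each (sin, cos) pair is rotated by an angle that depends only on k. The sizes and the `shift` helper are illustrative.

```python
import math

import torch

d_model, max_len, offset = 16, 50, 5

# w_i = 1 / 10000^(2i / d_model); even dims hold sin(pos * w_i), odd dims cos(pos * w_i)
freqs = torch.exp(
    torch.arange(0, d_model, 2, dtype=torch.float64) * (-math.log(10000.0) / d_model)
)
position = torch.arange(max_len, dtype=torch.float64).unsqueeze(1)
pe = torch.zeros(max_len, d_model, dtype=torch.float64)
pe[:, 0::2] = torch.sin(position * freqs)
pe[:, 1::2] = torch.cos(position * freqs)


def shift(pe_row: torch.Tensor, k: int) -> torch.Tensor:
    """Map PE(pos) to PE(pos + k) with a rotation that depends only on k."""
    s, c = pe_row[0::2], pe_row[1::2]
    cos_k, sin_k = torch.cos(k * freqs), torch.sin(k * freqs)
    out = torch.empty_like(pe_row)
    out[0::2] = s * cos_k + c * sin_k  # sin(a + b) = sin a cos b + cos a sin b
    out[1::2] = c * cos_k - s * sin_k  # cos(a + b) = cos a cos b - sin a sin b
    return out


# The same linear map works for every starting position
for pos in (0, 7, 30):
    print(torch.allclose(shift(pe[pos], offset), pe[pos + offset]))  # True
```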

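10. What other positional encoding techniques do you know, and what are the advantages and disadvantages of each?

11. Briefly describe the residual structure in the Transformer and its purpose.

12. Why do Transformer blocks use LayerNorm instead of BatchNorm? Where is LayerNorm placed in the Transformer?

13. Briefly describe BatchNorm and its advantages and disadvantages.

14. Briefly describe the feed-forward network in the Transformer. Which activation function does it use? What are its advantages and disadvantages?

15. How do the encoder and decoder interact? (This is a good place to ask about attention in seq2seq models.)

16. How does the multi-head self-attention in the decoder differ from that in the encoder? (Why does decoder self-attention need a sequence mask?)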

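17. Where does the Transformer's parallelism show up? Can the decoder be parallelized?

Self-attention, multi-head attention, the FFN, and the layers: within a layer, all positions (and all heads) are computed at once rather than step by step.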
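A sketch of why the decoder can be parallelized during training but not at inference: with a causal mask, computing attention for all positions at once gives the same result as computing it one position at a time. This is a single attention layer with random q, k, v (assumptions for illustration); in real inference the inputs for later positions are not known until the earlier tokens have been generated, so generation still proceeds step by step.

```python
import math

import torch

torch.manual_seed(0)
T, d = 6, 8
q, k, v = torch.randn(T, d), torch.randn(T, d), torch.randn(T, d)


def attend(q_rows, k_rows, v_rows, mask=None):
    scores = q_rows @ k_rows.T / math.sqrt(d)
    if mask is not None:
        scores = scores.masked_fill(~mask, float("-inf"))
    return torch.softmax(scores, dim=-1) @ v_rows


# Training: all T positions in one pass, with a causal mask (teacher forcing)
causal = torch.tril(torch.ones(T, T, dtype=torch.bool))
parallel_out = attend(q, k, v, causal)

# Inference-style: one step at a time, each query sees only keys/values up to t
step_out = torch.stack([attend(q[t : t + 1], k[: t + 1], v[: t + 1])[0] for t in range(T)])

print(torch.allclose(parallel_out, step_out, atol=1e-5))  # True
```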

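19. How is the learning rate set when training the Transformer? How is dropout set, and where is it applied? Is there anything to watch out for with dropout at test time?

20. Does the residual connection on the decoder side add back the masked information about later, unseen positions, causing information leakage?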