Hinge loss - SVM

| Created | |

|---|---|

| Tags | Loss |

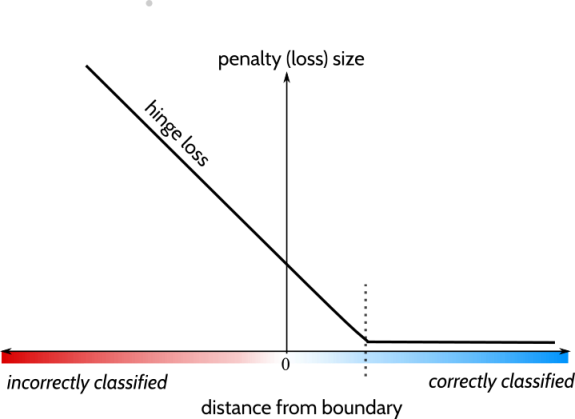

The hinge loss is a special type of cost function that not only penalizes misclassified samples but also correctly classifies ones that are within a defined margin from the decision boundary.

Hinge loss is a loss function primarily used in binary classification tasks, particularly in support vector machines (SVMs) and linear classifiers. It is designed to maximize the margin between classes by penalizing misclassified samples. The hinge loss function is defined as:

where:

- is the true class label (-1 or 1 for binary classification),

- is the predicted score, often the output of a linear classifier,

- The function returns 0 if , otherwise returns x.

Interpretation:

- Hinge loss penalizes misclassifications by the margin between the predicted score and the true label. If the predicted score is on the correct side of the decision boundary (i.e., \(y_i \cdot \hat{y}_i > 1\)), the loss is zero. Otherwise, the loss increases linearly with the magnitude of the margin violation.

Applications:

- Support Vector Machines (SVMs):

- Hinge loss is commonly used as the loss function in SVMs, where the goal is to find the hyperplane that maximizes the margin between classes. SVMs aim to minimize the hinge loss while maximizing the margin, leading to a robust decision boundary.

- Binary Classification:

- Hinge loss can be used in binary classification tasks, especially when linear classifiers such as linear SVMs or logistic regression are employed. It encourages models to correctly classify samples with a margin of at least one.

- Multi-class Classification:

- Hinge loss can also be extended to multi-class classification problems using techniques such as one-vs-all or one-vs-one approaches. In multi-class settings, the hinge loss is applied to each class separately, and the overall loss is the sum of individual class losses.

Python Implementation (using scikit-learn):

from sklearn.metrics import hinge_loss

# Example ground truth and predicted scores

y_true = [1, -1, 1, 1, -1]

y_scores = [0.5, -0.5, 1.2, 0.8, -1.2] # Predicted scores (output of linear classifier)

# Calculate hinge loss

loss = hinge_loss(y_true, y_scores)

print("Hinge Loss:", loss)

In this example, y_true contains the true class labels (-1 or 1), and y_scores contains the predicted scores from a linear classifier. We calculate the hinge loss using the hinge_loss function from scikit-learn's metrics module. Lower hinge loss values indicate better model performance, where correctly classified samples have a margin of at least one.

import numpy as np

from sklearn.metrics import hinge_loss

def hinge_fun(actual, predicted):

# replacing 0 = -1

new_predicted = np.array([-1 if i==0 else i for i in predicted])

new_actual = np.array([-1 if i==0 else i for i in actual])

# calculating hinge loss

hinge_loss = np.mean([max(0, 1-x*y) for x, y in zip(new_actual, new_predicted)])

return hinge_loss

# case 1

actual = np.array([1, 0, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1, 1, 0, 1, 1, 0, 1, 0, 1])

predicted = np.array([0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 0, 1])

new_predicted = np.array([-1 if i==0 else i for i in predicted])

print(hinge_loss(actual, new_predicted)) # sklearn function output 0.4

print(hinge_fun(actual, predicted)) # my function output 0.4

# case 2

actual = np.array([1, 0, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1])

predicted = np.array([0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 1, 1])

new_predicted = np.array([-1 if i==0 else i for i in predicted])

print(hinge_loss(actual, new_predicted)) # sklearn function output 0.5

print(hinge_fun(actual, predicted)) # my function output 0.5